COMMENTARY

A framework for the governance of AI in healthcare for India: An exploratory model

Shambhavi Naik, Bharath Reddy

Published online first on May 8, 2025. DOI:10.20529/IJME.2025.037

Abstract

AI-enabled healthcare presents exciting opportunities for better healthcare outcomes in India. The governance of any emerging technology can take multiple forms; mitigating risks while incentivising applications will require various policy instruments. Understanding public interest and market failures, such as the under-provision of goods with positive externalities like vaccines, helps identify broad governance priorities. Further, analysing AI’s impact on specific tasks across different time horizons clarifies its risks and opportunities. Such an analysis reveals governance opportunities — creating better datasets, building accountability in healthcare systems, and upskilling medical personnel to achieve enhanced healthcare outcomes. As AI develops alongside other technologies in the healthcare space, periodic reviews will be necessary to assess their holistic, rather than individual, impacts. A short-, medium-, and long-term outlook for integrating AI in India is essential to understand its underlying ethical concerns and unintended consequences, and to effectively manage potential risks.

Keywords: AI, healthcare, governance, ethics, policy

Introduction

Health is a key determinant of quality of life, and ensuring access to appropriate healthcare is thus a priority for the government. In India, various aspects of health are governed by the state and union governments. The Indian government’s expenditure on healthcare is forecasted to increase to 2.5% of the Gross Domestic Product (GDP) by 2025, with investments in infrastructure; research in healthcare innovations, such as medical devices and precision medicines; and training of medical staff [1]. However, it still needs to address several challenges linked to inequitable healthcare delivery, sub-optimal healthcare outcomes, and financial stress through out-of-pocket expenses [2, 3]. Improving India’s healthcare outcomes requires interventions to upgrade primary health infrastructure, reduce healthcare delivery disparities across urban and rural areas, and fill vacancies for qualified medical personnel [4].

As the government executes various health-related responsibilities, it plays the roles of policymaker, regulator, and service provider, which may sometimes be conflicting or even overlapping. Adopting new-age technologies can improve its capacity to perform these roles and partly address entrenched health inequities. Much like cutting-edge biomedical technologies, such as clustered regularly interspaced short palindromic repeats (CRISPR), artificial intelligence (AI) — when applied appropriately — is a potentially powerful tool to improve healthcare delivery and outcomes.

AI tools present many opportunities in healthcare: as presented in the case studies in this paper, they can augment healthcare capacity, improve prognoses, reduce costs, and personalise healthcare [5]. AI applications have been trialled with success across the healthcare sector, including in biomedical research [6], diagnosis, medical interventions, public health, and healthcare administration [7]. They are also expected to revolutionise public health research and practice [8], a government prerogative.

However, risks can emerge during the development and deployment of AI tools for healthcare. For example, discrepancies between training data and real-world datasets could lead to inaccurate diagnoses. Biases might arise from inadequate representation in training data in terms of gender, race, age, location, or socioeconomic factors. AI systems can amplify such biases, creating reinforcing feedback loops when the data they generate is used to train future models. As AI systems scale up, we need to guard against reliance on automated decision-making systems while ignoring contradictory information. Other concerns pertain to transparency, accountability, privacy, and security in employing AI systems. There is also anxiety that the adoption of AI can lead to job losses, particularly for roles involving repetitive, rule-based tasks. AI should not be considered a silver bullet to solve all healthcare-associated issues, as its mis-deployment can hamper healthcare outcomes. Therefore, it is necessary to contextualise the use of AI to Indian healthcare requirements, the availability of necessary infrastructure and expertise, and the consent dynamics of the patients and medical personnel involved. For example, AI-enabled tools that use English might not be effective for non-English speakers. Uninformed patients might consider it necessary to supply their data to such tools without understanding the underlying risks.

The process of building AI systems can be construed as a supply chain, with applications built on top of models trained on data using computation resources. Our findings on AI governance indicate that each input to building AI has governance implications [9]. Some of these insights inform the governance framework that we present in this paper.

Given the immense potential of AI to transform healthcare, it is necessary that countries use appropriate governance methods to promote its benefits while managing the risks associated with its use. The US has chosen to regulate AI through the combination of an executive order [10] that identifies riskier models, based on computing requirements and data needs, and additional sectoral regulations. The Food and Drug Administration (FDA) is the nodal agency for approving AI-enabled healthcare devices that come to market. In contrast, the regulatory landscape of the European Union (EU) has a more cohesive set of rules that work in a centralised manner to provide AI oversight. Taking a risk-based approach, the EU AI Act [11] prohibits the use of AI in certain areas (such as facial recognition and hiring), while other uses of AI, such as in healthcare, are classified into risk categories with varying levels of regulation and compliance.

In India, the Medical Device Rules, 2017, and its 2020 amendment expand the definition of a medical device to include any software or accessory intended for medical use [12]. In addition, the Indian Council of Medical Research (ICMR), in its Ethical Guidelines for Application of Artificial Intelligence in Biomedical Research and Healthcare, outlines the ethical issues associated with the use of AI in healthcare in India [13]. The Guidelines also note the need for more nuanced governance to validate and deploy various AI applications in healthcare.

It is prudent that as the technology evolves, it is assessed on the net benefit-to-risk potential of each application. We propose a framework to identify the governance priorities and mechanisms that can balance the opportunities and risks of adopting AI in healthcare. We also examine three use cases using specific analytical approaches to understand the ethical questions arising from various applications of AI in healthcare, identify governance priorities, and suggest possible policy instruments to achieve them.

Framework 1: Identifying broad governance questions and priorities

The state’s governance priorities can be broadly deduced by identifying market failures — such as the inefficient allocation of goods and services by a free market, leading to sub-optimal outcomes for society. In the case of AI in healthcare, these can generally take three forms — externalities, public goods, and information asymmetry.

Externalities arise when economic activities have unintended positive or negative impacts on third parties not involved in the transaction. For example, vaccination-led herd immunity benefits everyone, including those not vaccinated. In contrast, pollution emitted by an industry negatively impacts the health of the surrounding community. Relying on the private market to manage externalities might lead to sub-optimal vaccination levels or uncontrolled pollution, leaving populations susceptible to infections and diseases.

Public goods is an economics term that refers to goods that are non-rivalrous and non-excludable; individuals can benefit without paying for them, leading to under-provisioning by the market. Clean air, streetlights, and open-access public health datasets are examples of such goods. Healthcare itself is not a public good. The private sector may lack incentives to address health issues that disproportionately affect the poor. Governance priorities in such areas should primarily aim to incentivise the private sector or, if needed, entrust government agencies to create such goods.

Information asymmetry occurs when one party in a transaction has more information than the other. In the case of AI in healthcare, such asymmetry could occur in relationships between the technology provider, healthcare provider, and patients. The healthcare provider and patients might be unaware of the technology’s limitations, which could hinder their ability to make informed decisions. Governance mechanisms to address this market failure should aim to increase disclosure and promote accountability.

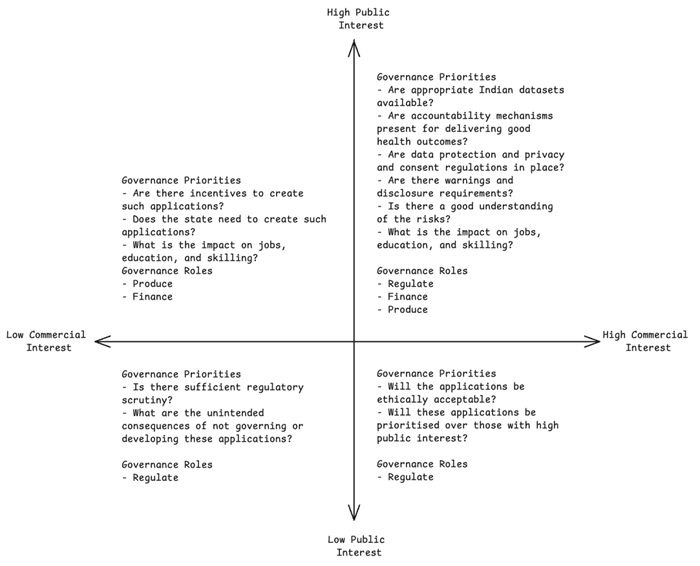

A useful tool to identify broad governance priorities is a 2×2 matrix (Figure 1) with one axis representing commercial interest and the other axis representing public interest. Commercial interest represents market opportunities for AI technologies. Public interest represents the benefits that society stands to accrue from the technology, including factors such as public health and safety, equity, and accessibility. The government can use such a matrix to determine governance priorities across various AI applications, identifying where it might need to play the role of a policymaker, regulator, or service provider. We assess a few applications as examples, but this matrix can be extended to all applications under the government’s consideration.

Figure 1. Framework for identifying governance priorities and roles by mapping applications on public interest and commercial interest (Source: Authors’ analysis)

Examples of AI applications with high public interest and high commercial interest include those used for medical imaging, drug discovery, and healthcare administration. The governance objective for this category is addressing information asymmetry. This can be achieved through various instruments aimed at enhancing transparency and accountability. For example, the EU AI Act requires that all high-risk AI systems ensure transparency in the provision of information from developers to deployers, adopt appropriate human oversight tools, undergo third-party audits, and identify and disclose risks to health, safety, and fundamental rights [14]. Similarly, in the US, the National Institute of Standards and Technology has published an AI risk management framework, intended for voluntary use, to improve the trustworthiness of AI systems [15]. The Centers for Medicare & Medicaid Services in the US has enabled the safe utilisation of AI in healthcare by requesting information to improve healthcare outcomes and service delivery [16]. It also requires Medicare Advantage organisations to make coverage decisions based on individual cases and not generalised algorithms [17].

In addition, it is important to incentivise goods with positive externalities. Examples include the US Centers for Disease Control and Prevention’s Data Modernisation Initiative [18] or the EU’s open health datasets [19], which have wide societal benefits. High-quality datasets that represent India’s diverse population and health conditions are a key enabler for innovation. For example, the union government has already created anonymised datasets of Indian genomes for drug development [20]. Investments in education and skills development are necessary to support the advancement of AI technologies in these areas. Thus, for AI applications in this quadrant (see Figure 1), the primary objective should be to create robust governance; the secondary objective must be to incentivise applications where public interest may outweigh commercial interests.

AI applications with high public interest but low commercial interest include those used for public health, preventive health, or the treatment of health conditions that disproportionately impact populations with low purchasing power. The development and deployment of such applications can be encouraged through financial incentives or procurement guarantees that can help alleviate demand uncertainty and investment risks. Such incentives were used effectively to fast-track the creation of Covid-19 vaccines [21].

AI applications with low public interest but high commercial interest include automation of medical claims processing or more futuristic applications, such as human enhancement or brain-computer interfaces pioneered by Neuralink [22], which could help restore mobility for paralysed individuals. The governance objectives for this category of applications could include addressing ethical considerations through transparency and accountability measures. The government’s primary role should be that of a regulator, responsible for ensuring that appropriate safety guardrails are in place.

Lastly, AI applications with low public interest and low commercial interest could include those that are related to rare diseases. Although these might not have huge market potential, there should be an effort to understand the consequences of not prioritising these applications. However, if these applications are developed through individual interest, there should be regulatory frameworks to ensure transparency and accountability. The primary governance objective for these applications would be to create regulatory pathways that reduce entry barriers while also safeguarding the population.
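The quadrant-to-priority mapping described in this section can be sketched as a simple lookup. The following is a minimal illustration only: the names `Level`, `GOVERNANCE_PRIORITY`, and `governance_priority` are hypothetical, and the priority strings are paraphrases of the text above, not an official classification.

```python
from enum import Enum


class Level(Enum):
    LOW = "low"
    HIGH = "high"


# Keys are (public interest, commercial interest).
# Values paraphrase the governance priorities discussed for each quadrant of Figure 1.
GOVERNANCE_PRIORITY = {
    (Level.HIGH, Level.HIGH): "Address information asymmetry; incentivise positive externalities",
    (Level.HIGH, Level.LOW): "Encourage through financial incentives or procurement guarantees",
    (Level.LOW, Level.HIGH): "Regulate: safety guardrails, transparency, and accountability",
    (Level.LOW, Level.LOW): "Create regulatory pathways that lower entry barriers while safeguarding the population",
}


def governance_priority(public_interest: Level, commercial_interest: Level) -> str:
    """Return the broad governance priority for an application's quadrant."""
    return GOVERNANCE_PRIORITY[(public_interest, commercial_interest)]


# Example: automated medical claims processing is described in the text as
# low public interest, high commercial interest.
print(governance_priority(Level.LOW, Level.HIGH))
```

In practice, where an application sits may vary by context (for example, urban versus rural deployment), so the same tool could map to different quadrants.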

Framework 2: A task-based framework for guiding governance of AI in healthcare

This section proposes a task-based framework to further delineate the ethical concerns associated with AI-based healthcare interventions. It shifts the focus from examining AI’s overall impact on the entire healthcare ecosystem to analysing its impact on specific healthcare tasks over the short, medium, and long terms. Using this framework, we can identify the key requirements for AI applications to work effectively in the Indian context and develop governance strategies to address potential ethical concerns.

This framework proposes breaking down the healthcare process into individual tasks to better analyse the specific functions impacted by AI. The impacts can be categorised as replacing human capacity in performing a task, augmenting human capacity in performing a task, or creating new capabilities that humans cannot perform alone. By projecting these impacts over the short, medium, and long term, policymakers can make better-informed decisions about necessary actions. The following three examples illustrate how this framework can be applied.
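The structure of this framework (tasks, impact categories, and time horizons) can be sketched as a small data model. This is an illustrative sketch under stated assumptions: the class and function names are hypothetical, the `NONE` category is added here for tasks that AI does not affect, and the two sample rows are one possible coding of entries from Table 1 rather than the authors' own classification.

```python
from dataclasses import dataclass
from enum import Enum


class Impact(Enum):
    NONE = "no impact on current capacity"  # assumption: not one of the three named categories
    AUGMENT = "augments human capacity"
    REPLACE = "replaces human capacity"
    NEW_CAPABILITY = "creates a new capability"


class Horizon(Enum):
    SHORT = "0-10 years"
    MEDIUM = "10-20 years"
    LONG = ">20 years"


@dataclass
class TaskAssessment:
    task: str
    impacts: dict  # maps Horizon -> Impact


def tasks_with_impact(assessments, horizon, impact):
    """List the tasks expected to see the given impact in the given horizon."""
    return [a.task for a in assessments if a.impacts[horizon] is impact]


# Hypothetical coding of two medical imaging tasks, for illustration only.
imaging = [
    TaskAssessment(
        "Preparing patients for imaging",
        {Horizon.SHORT: Impact.NONE, Horizon.MEDIUM: Impact.AUGMENT, Horizon.LONG: Impact.REPLACE},
    ),
    TaskAssessment(
        "Recording image",
        {Horizon.SHORT: Impact.AUGMENT, Horizon.MEDIUM: Impact.AUGMENT, Horizon.LONG: Impact.AUGMENT},
    ),
]

# Tasks where replacement is anticipated in the long term may warrant reskilling plans.
print(tasks_with_impact(imaging, Horizon.LONG, Impact.REPLACE))
```

Querying by horizon and impact type in this way is one approach a policymaker might take to see which tasks call for early action, such as reskilling, and which can be left to periodic review.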

Use of AI in medical imaging

AI is set to revolutionise diagnostic imaging by improving diagnostic precision, saving physicians’ time, and enabling better health outcomes [23]. However, its use in India has to consider existing disparities in current healthcare systems and training on appropriate datasets. In urban areas, where there is a high specialist-to-patient ratio, this application would be in the high commercial interest–low public interest quadrant from Figure 1, but in rural areas, where access to specialists may be limited, there may be more public interest, requiring the government to actively intervene and deploy such tools.

Table 1 shows a task-based analysis of the impact of AI in medical imaging.

Table 1. Task-based framework for identifying the impact of AI in medical imaging

| Use case: Medical imaging | Short-term impact (0–10 years) | Medium-term impact (10–20 years) | Long-term impact (>20 years) |
| --- | --- | --- | --- |
| Preparing patients for imaging | Does not impact current capacity | May be assisted by robotics | AI and robotics together may replace manual human function, but human oversight functions will remain |
| Preparing the instrument for imaging | Does not impact the current capacity | May be impacted by robotics | May be replaced by robotics, but human oversight functions will remain |
| Recording image | Will be augmented by AI | Will be augmented by AI | Will be augmented by AI |
| Image processing | Augments current capacity, reduces time for processing, and extracts data better than the human eye | Time for processing will further reduce, cost of image processing will also likely reduce | AI will be the routine gold standard for processing |
| Diagnosis | Augments current capacity, improves diagnosis, reduces the time for diagnosis and likely cost | | |
| Report generation | Capability addition | | |

Opportunity analysis

AI in medical imaging augments the physician’s capacity by increasing the scope of images that can be examined [24, 25] and potentially reducing diagnosis time. AI tools could be developed to diagnose diseases from poor-quality images and identify patterns that are not visible to the human eye. AI may be able to diagnose with better accuracy from lower resolution images, spurring innovation in low-cost imaging equipment that can be deployed at scale across the country.

While AI is unlikely to replace human jobs, in combination with robotics, it could impact jobs in future scenarios. However, this is currently unlikely, given the costs of such technologies.

Risk analysis

Overreliance on automated decision-making systems leads to automation bias, where specialists and technicians do not exercise their diagnostic skills and ignore contradicting information. Having a “human in the loop” will not be enough to address this problem. Effective use will require workflow redesign and upskilling of personnel to use AI systems to augment their capabilities while retaining their independent judgement [26]. The effectiveness of AI in medical imaging depends on algorithms developed with appropriate datasets, similar to clinical trials for new drugs. Accountability mechanisms have to be clear; while the certifying doctor is accountable for the diagnosis, the role of AI developers and medical institutions must be clarified.

Another ethical concern is the prioritising of common diseases for AI solutions over rare diseases or those affecting poorer socioeconomic groups, exacerbating disparities. Further, faster AI adoption in regions such as the US or EU may lead affluent Indian patients to consult foreign doctors with advanced assessment tools and discourage Indian companies from developing AI solutions suited to local needs.

Governance considerations

AI algorithms should be trained on Indian datasets. Regulatory standards should cover dataset quality, algorithm efficacy, and explainability. An AI-accelerated approach with a human in the loop can enable the deployment of AI tools while ensuring accountability of the medical decision and building trust in the AI tool [27]. The National Digital Health Mission (NDHM) can fund research for AI tools in areas lacking private-sector interest. Appropriate anonymisation protocols and consent mechanisms need to be formulated to ensure the privacy and autonomy of donors.

Use of AI in drug discovery

AI is a powerful tool and, if used effectively, could reduce drug discovery costs. In India, this application should be promoted to facilitate research on unmet medical needs. Using AI in drug discovery could accelerate research, create jobs and, over time, set up a domestic pipeline from research to manufacturing. AI in drug discovery could belong to any quadrant in Figure 1, depending on the condition being targeted.

Table 2 shows a task-based analysis of the impact of AI in drug discovery.

Table 2. Task-based framework for identifying the impact of AI in drug discovery

| Use case: AI in Drug discovery | Short-term impact (0–10 years) | Medium-term impact (10–20 years) | Long-term impact (>20 years) |
| --- | --- | --- | --- |
| Identification and validation of targets | Expedites research, expands scope by identifying new targets, reduces costs through improved prediction capabilities, and creates new job opportunities | May lead to the rehaul of the basic drug discovery pipeline, eventually increasing the number of scientists cross-trained in drug development and AI applications and reducing the requirement of other scientists | May lead to the rehaul of the basic drug discovery pipeline, eventually increasing the number of scientists cross-trained in drug development and AI applications and reducing the requirement of other scientists |
| Preclinical phase | May reduce the time and cost of preclinical trials by improving toxicity prediction | In conjunction with other technologies, such as organs-on-chips, may replace animal testing. Could lead to the loss of jobs for those maintaining animal houses but create laboratory jobs for other forms of testing | Actual wet lab testing may be reduced to some essential tests only |
| Clinical trials | Reduces costs by streamlining patient selection, increases compliance through better monitoring, and enhances scope by identifying drugs for repurposing | May automate patient recruitment and monitoring, creating a new pipeline for setting up clinical trials | If combined with robotics, may further impact jobs of technicians involved in clinical trial administration and monitoring |

Opportunity analysis

Using AI in drug discovery expands the scope of research and reduces costs through improved efficiency. Currently, drug discovery takes up to 15 years and costs US$1–2 billion per approved drug [28]. Over 85% of candidate drugs tested during 2000–2015 failed to achieve their expected impacts [29]. AI can streamline this process by improving the assessment of the biological activity of candidate drugs [30], predicting their toxicity [31], and analysing drug–drug interactions [32]. High costs, lengthy timelines, and limited clinical development experience lead companies to pursue out-licensing deals instead of developing drugs independently [33, 34]. Narayanan Venkatasubramanian, CEO and co-founder of Peptris — a biotech startup using AI for expediting drug delivery in oncology, inflammation, and rare diseases — estimates that their technology could reduce the pre-clinical phase from five years to one and a half years and cut expenses from US$700 million to US$400,000 [35]. These reduced barriers to research will result in increased intellectual property creation in India and help in drug discovery for treatments that can help address India’s unmet medical needs. The lowered cost of medicines will also make healthcare more affordable.
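As a rough check on the scale of the estimate quoted above for the pre-clinical phase, the relative reductions can be computed directly. The sketch below uses only the figures given in the text (five years to one and a half years; US$700 million to US$400,000); the function name `percent_reduction` is illustrative, and the figures themselves remain the company's own estimate.

```python
def percent_reduction(before: float, after: float) -> float:
    """Relative reduction from `before` to `after`, as a percentage."""
    return (before - after) / before * 100


# Figures as quoted in the text for the pre-clinical phase.
time_cut = percent_reduction(5.0, 1.5)                # years
cost_cut = percent_reduction(700_000_000, 400_000)    # US dollars

print(f"Time reduction: {time_cut:.1f}%")   # Time reduction: 70.0%
print(f"Cost reduction: {cost_cut:.2f}%")   # Cost reduction: 99.94%
```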

Risk analysis

AI-powered drug discovery depends on the analysis of large datasets. For effective use in the preclinical and clinical phases, these datasets must represent various Indian genetic subgroups. Challenges include the lack of structured datasets, interoperability issues, insufficient open medical datasets, and inadequate analytics solutions for big data [36]. Additionally, India’s health system is not homogenous and incorporates modern medicine alongside traditional systems. In September 2024, the World Health Organization Global Traditional Medicine Centre (GTMC) and Digital Health and Innovation (DHI) organised a global meeting on AI applications in traditional medicine at the All India Institute of Ayurveda (AIIA) in New Delhi [37]. Participants decided that integrating AI into traditional medicine requires further study and agreed to develop plans for AI applications to support learning and understanding among traditional medicine stakeholders. This step raises questions regarding ownership of traditional medicine data and benefit sharing. There is also a concern that private companies may neglect therapies for rare diseases. Like AI in imaging, AI in drug discovery should also consider the accountability of the AI-generated data and conduct adequate safety/toxicity tests to support the AI-driven candidate.

Governance considerations

The use of AI in drug discovery can enable India to build drugs by lowering entry barriers for research on unmet needs. Where a market exists, the government can facilitate AI usage by setting standards and streamlining regulatory approvals. Publicly funded databases, such as the Department of Biotechnology’s 10,000 genome project, should be made available to the public with safeguards ensuring donor anonymity and consent. Standards and rules for pre-clinical and clinical trials may need amendments to include AI-based processes. Besides regulatory changes, direct government action through research funding can incentivise research on drugs for neglected diseases. Overall, regulatory clarity on integrating AI tools into existing drug discovery processes can expedite both tool development and drug discovery. The ICMR’s current ethical framework on the use of AI is limited to tools created for all biomedical research and applications involving human participants and/or their biological data. This limitation could render impactful research on pathogens or animal/plant health beyond the remit of the guidelines. Therefore, there is a need to consider the use of AI in healthcare more holistically.

Use of AI in patient engagement

Sustained patient engagement heavily influences public health and healthcare outcomes [38]. In India, where the doctor–patient ratio is poor and inequitably distributed, AI can help manage time for both patients and medical staff, streamline communication channels, and prioritise medical needs. In this example, we will look at three possible tasks that can smooth patient engagement for better health outcomes. The use of AI in patient engagement could fall into the low commercial interest–high public interest quadrant when deployed in rural areas or for certain tasks, such as addressing generic patient concerns.

Table 3 shows a task-based analysis of the impact of AI on patient engagement.

Table 3. Task-based framework for identifying the impact of AI in patient engagement

| Use case: AI in Patient engagement | Short-term impact (0–10 years) | Medium-term impact (10–20 years) | Long-term impact (>20 years) |
| --- | --- | --- | --- |
| Scheduling | Will help patients locate the nearest available medical doctor. Combined with telemedicine, could help create a pipeline for patients needing urgent action and those who can be helped through virtual means | Will help in understanding underserved areas or institutions with long patient waiting times. Should also reduce patient waiting time overall | Assisted by other technologies, such as wearables and telemedicine, will improve scheduling for patients and manage the time of doctors, nurses, and ASHA workers |
| Follow-up/Routine reminders | Will lead to better patient compliance with medical prescriptions and better healthcare outcomes. May also nudge behavioural changes in patients, helping improve public health outcomes and compliance with routine activities, such as vaccination schedules or medical appointments for expectant mothers | May improve population health as the system expands from healthcare advice to more general public health advice | Widespread adoption of this system could reduce the burden on medical staff, particularly ASHA workers, freeing up their time for other work |
| Addressing patient concerns | Could help address patients' queries about health, medications, etc. and lead to better health outcomes and reduce the time medical staff spends on them | Along with wearables, can be used to provide more accurate medical information to a patient about their health and potential medical needs | Over time, such tools may become the first port of call for patients. Could also serve as data repositories for the development of advanced AI tools |

Opportunity analysis

The use of AI in managing patient engagement [39] can greatly reduce the workload of India’s existing medical staff, including doctors, nurses, ASHA workers, and administrators. Such systems are already used across various countries, including at a few hospital chains in India. This use case may be particularly relevant in remote areas where the doctor–patient ratio is poor and patients have to travel great distances to seek medical help. The use of AI in scheduling helps doctors and technicians manage time and allows patients to easily find the nearest available medical centre. Over time, the data collated through these systems can help discover underserved areas where further government interventions might be required. AI-enabled patient engagement can help patients comply with medical interventions, resulting in better health outcomes for the community.

Risk analysis

The delivery of AI-enabled tools for patient engagement depends on robust access to the internet or other telecommunication services, which may not be reliable in remote areas. Relying on AI tools under such conditions could lead to a false sense of security and assumed patient compliance. Datasets created to operationalise these tools will contain sensitive patient personal and health data and therefore need strict protocols to ensure privacy. It is important to provide clarity on the explainability and liability of AI tools addressing patient concerns. In addition, it is necessary to educate the public that an AI tool cannot be a replacement for medical staff; it can only augment their functions.

Governance considerations

The use of AI for processes such as sending reminders for national health campaigns or securing patient compliance can be easily adopted into India’s existing health missions. The National Health Data Management Policy can be extended to provide support to AI tools, protect the personal and health data of consumers, and ensure compliance with India’s data protection law. The use of AI to address patient concerns would require the generation of India-specific datasets and standards. The widespread adoption of such tools may lead to some surplus in the existing medical workforce, particularly of medical support staff. The application of AI could augment their services in remote areas but replace some of their functions in urban areas with an already high doctor–patient ratio. Thus, the rollout of these tools must be gradual, allowing for the reskilling of support staff and their assignment to other critical tasks.

Conclusion

AI-enabled healthcare presents exciting opportunities to enable better healthcare outcomes in India. The governance of this emerging technology can take multiple forms, such as building guardrails, encouraging transparency and disclosure, engaging stakeholders, or incentivising particular applications.

We propose two frameworks to identify suitable policy interventions and balance opportunities and risks. The first framework involves identifying governance priorities by understanding market failures and public and commercial interests in relation to technologies. The second framework involves breaking down the healthcare workflow into specific tasks and analysing the trade-offs involved in adopting AI for these tasks across different time horizons. Our analysis reveals governance opportunities such as creating better datasets, building accountability in healthcare systems, and upskilling medical personnel to achieve enhanced healthcare outcomes. The limitations of governing such a technology involve anticipating some of its unknown risks including an overreliance on technology.

The challenges to effectively governing AI in healthcare include building regulatory capacity to identify stakeholder concerns and choosing the right policy instruments to address them. This requires balancing the safeguarding of public interest against the risk of stifling innovation.

As AI develops along with other technologies in the healthcare space, periodic reviews will be needed to assess their impacts holistically. A short-, medium-, and long-term outlook for integrating AI, contextualised to India, is essential to understand its underlying ethical concerns and unintended consequences and to effectively manage potential risks.

Authors: Shambhavi Naik ([email protected]), Head of Research and Chairperson of the Advanced Biology programme, Takshashila Institution, Church Street Bengaluru, Karnataka 560 001, INDIA; Bharath Reddy (corresponding author — [email protected]), Associate Fellow, High-Tech Geopolitics Programme, Takshashila Institution, Church Street Bengaluru, Karnataka 560 001, INDIA.

Authors’ note: The authors have used ChatGPT for copy-editing purposes at their end.

Conflict of Interest: None declared

Funding: None

Acknowledgments: The authors would like to acknowledge Rijesh Panicker for his significant contributions to the ideation and design of this paper. Rijesh is a Fellow of the High-Tech Geopolitics programme at the Takshashila Institution.

To cite: Naik S, Reddy B. A framework for the governance of AI in healthcare for India: An exploratory model. Indian J Med Ethics. Published online first on May 8, 2025. DOI: 10.20529/IJME.2025.037

Manuscript Editors: Manjulika Vaz, Sunita Bandewar

Peer Reviewers: Varsha Barde and an anonymous reviewer

Copy Editing: This manuscript was copy edited by The Clean Copy.

Copyright and license

©Indian Journal of Medical Ethics 2025: Open Access and Distributed under the Creative Commons license (CC BY-NC-ND 4.0), which permits only noncommercial and non-modified sharing in any medium, provided the original author(s) and source are credited.

References

- Kumar A. The transformation of the Indian healthcare system. Cureus. 2023 May 16;15(5):e39079. https://doi.org/10.7759/cureus.39079

- Sriram S, Albadrani M. Impoverishing effects of out-of-pocket healthcare expenditures in India. J Family Med Prim Care. 2022 Nov;11(11):7120–7128. https://doi.org/10.4103/jfmpc.jfmpc_590_22

- Ambade M, Sarwal R, Mor N, Kim R, Subramanian SV. Components of out-of-pocket expenditure and their relative contribution to economic burden of diseases in India. JAMA Netw Open. 2022 May 2;5(5):e2210040. https://doi.org/10.1001/jamanetworkopen.2022.10040

- Baru RV, Bisht R. Health service inequities as challenge to health security. New Delhi: Oxfam India; 2010 Sept [cited 2024 Oct 15]. Report No: OIWPS – IV. Available from: https://www.oxfamindia.org/sites/default/files/2018-10/IV.%20Health%20Service%20Inequities%20as%20Challenge%20to%20Health%20Security.pdf

- Alowais SA, Alghamdi SS, Alsuhebany N, Alqahtani T, Alshaya AI, Almohareb SN, et al. Revolutionizing healthcare: the role of artificial intelligence in clinical practice. BMC Med Educ. 2023 Sep 22;23(1):689. https://doi.org/10.1186/s12909-023-04698-z

- A, Virmani T, Pathak V, Sharma A, Pathak K, Kumar G, Pathak D. Artificial intelligence-based data-driven strategy to accelerate research, development, and clinical trials of COVID vaccine. BioMed Res Int. 2022 Jul 6;2022(1):1–16. https://doi.org/10.1155/2022/7205241

- Han R, Acosta JN, Shakeri Z, Ioannidis JPA, Topol EJ, Rajpurkar P. Randomised controlled trials evaluating artificial intelligence in clinical practice: a scoping review. Lancet Digit Health. 2024 May;6(5):e367–e373. https://doi.org/10.1016/S2589-7500(24)00047-5

- Mooney SJ, Vikas P. Big data in public health: terminology, machine learning, and privacy. Annu Rev Public Health. 2018 Apr 1;39:95–112. https://doi.org/10.1146/annurev-publhealth-040617-014208

- Reddy B, Pai N, Panicker R, Sahu SS, Krishna S. Takshashila discussion document – a pathway to AI governance. Bengaluru: The Takshashila Institution; 2024 [cited 2024 Oct 15]. Available from: https://takshashila.org.in/research/a-pathway-to-ai-governance

- Executive order on the safe, secure, and trustworthy development and use of artificial intelligence of 2023. Washington DC: The White House; 2023 Oct 30 [cited 2024 Oct 15]. Executive Order 14110. Available from: https://www.federalregister.gov/documents/2023/11/01/2023-24283/safe-secure-and-trustworthy-development-and-use-of-artificial-intelligence

- Artificial Intelligence Act of 2024. EU Parliament and Council. 2024/1689. 2024 Jul 12 [cited 2025 May 1]. Available from: https://eur-lex.europa.eu/legal-content/EN/LSU/?uri=oj:L_202401689

- Manu M, Anand G. A review of medical device regulations in India, comparison with European Union and way-ahead. Perspect Clin Res. 2022 Jan–Mar;13(1):3–11. https://doi.org/10.4103/picr.PICR_222_20

- Indian Council of Medical Research. Ethical guidelines for application of artificial intelligence in biomedical research and healthcare. New Delhi: Indian Council of Medical Research; 2023. ISBN: 978-93-5811-343-3.

- Artificial Intelligence Act of 2024. EU Parliament and Council. 2024/1689. 2024 Jul 12 [cited 2025 May 1]. Available from: https://eur-lex.europa.eu/legal-content/EN/LSU/?uri=oj:L_202401689

- National Institute of Standards and Technology. AI risk management framework. Gaithersburg: National Institute of Standards and Technology; 2023 [cited 2024 Oct 15]. Available from: https://www.nist.gov/itl/ai-risk-management-framework/nist-ai-rmf-playbook

- Centers for Medicare & Medicaid Services. Request for information on artificial intelligence technologies for improving health care outcomes and service delivery. Baltimore: Centers for Medicare & Medicaid Services; 2024 [cited 2024 Oct 15]. Available from: https://www.cms.gov/digital-service/artificial-intelligence-demo-days

- Mello MM, Sherri R. Denial – artificial intelligence tools and health insurance coverage decisions. JAMA Health Forum. 2024;5(3):e240622. https://doi.org/10.1001/jamahealthforum.2024.0622

- US Centers for Disease Control and Prevention. Data modernization initiative (DMI). Decatur: Public Health Informatics Institute; 2024 [cited 2024 Oct 15]. Available from: https://www.cdc.gov/data-modernization/php/about/dmi.html

- European Data. Open health data on the European Data Portal. Europe: European Union; c2019 [cited 2024 Oct 15]. Available from: https://data.europa.eu/en/publications/datastories/open-health-data-european-data-portal

- Dutt A. Explained: The Genome India project, aimed at creating a genetic map of the country. The Indian Express; 2024 [cited 2024 Oct 15]. Available from: https://indianexpress.com/article/explained/explained-health/creating-indias-genetic-map-9187160/

- Lalani HS, Avorn J, Kesselheim AS. US taxpayers heavily funded the discovery of COVID-19 vaccines. Clin Pharmacol Ther. 2022 Mar;111(3):542–544. https://doi.org/10.1002/cpt.2344

- Neuralink. Neuralink – pioneering brain computer interfaces. 2024 [cited 2024 Oct 15]. Available from: https://neuralink.com/

- Mohamed K, Mona Albadawy. AI in diagnostic imaging: Revolutionising accuracy and efficiency. Computer Methods and Programs in Biomedicine Update. 2024;5:100146. https://doi.org/10.1016/j.cmpbup.2024.100146

- Arbabshirani MR, Fornwalt BK, Mongelluzzo GJ, Suever JD, Geise BD, Patel AA, et al. Advanced machine learning in action: identification of intracranial hemorrhage on computed tomography scans of the head with clinical workflow integration. NPJ Digit Med. 2018 Apr 4;1:9. https://doi.org/10.1038/s41746-017-0015-z

- Liu Y, Wen Z, Wang Y, Zhong Y, Wang J, Hu Y, et al. Artificial intelligence in ischemic stroke images: current applications and future directions. Front Neurol. 2024 Jul 10;15:1418060. https://doi.org/10.3389/fneur.2024.1418060

- Pesapane F, Codari M, Sardanelli F. Artificial intelligence in medical imaging: threat or opportunity? Radiologists again at the forefront of innovation in medicine. Eur Radiol Exp. 2018;2:35. https://doi.org/10.1186/s41747-018-0061-6

- Hasani N, Morris MA, Rhamim A, Summers RM, Jones E, Siegel E, et al. Trustworthy artificial intelligence in medical imaging. PET Clin. 2022 Jan;17(1):1–12. https://doi.org/10.1016/j.cpet.2021.09.007

- Ashenden SK. Introduction to drug discovery. In: Ashenden SK. The era of artificial intelligence, machine learning, and data science in the pharmaceutical industry. Cambridge: Academic Press; 2021. ISBN: 9780128200452

- Wong CH, Siah KW, Lo AW. Estimation of clinical trial success rates and related parameters. Biostatistics. 2019 Apr 1;20(2):273–286. https://doi.org/10.1093/biostatistics/kxx069. Erratum in: Biostatistics. 2019 Apr 1;20(2):366. https://doi.org/10.1093/biostatistics/kxy072

- Hansen K, Biegler F, Ramakrishnan R, Pronobis W, von Lilienfeld OA, Müller KR, et al. Machine learning predictions of molecular properties: accurate many-body potentials and nonlocality in chemical space. J Phys Chem Lett. 2015 Jun 18;6(12):2326–31. https://doi.org/10.1021/acs.jpclett.5b00831

- Tran TTV, Surya Wibowo A, Tayara H, Chong KT. Artificial intelligence in drug toxicity prediction: recent advances, challenges, and future perspectives. J Chem Inf Model. 2023 May 8;63(9):2628–2643. https://doi.org/10.1021/acs.jcim.3c00200

- Zhang Y, Deng Z, Xu X, Feng Y, Junliang S. Application of artificial intelligence in drug–drug interactions prediction: a review. J Chem Inf Model. 2024 Apr 8;64(7):2158–2173. https://doi.org/10.1021/acs.jcim.3c00582

- Thomas B, Chugan PK, Srivastava D. Pharmaceutical research and licensing deals in India. Macro and micro dynamics for empowering trade, industry and society. New Delhi: Excel India Publishers; 2016 Jan. ISBN: 978-93-85777-07-3.

- Differding E. The drug discovery and development industry in India – two decades of proprietary small-molecule R&D. ChemMedChem. 2017 Jun 7;12(11):786–818. https://doi.org/10.1002/cmdc.201700043

- Jacob S. AI makes mark in drug discovery in India; start-up Peptris raises $1 mn. Business Standard. 2023 Dec 20 [cited 2024 Oct 15]. Available from: https://www.business-standard.com/industry/news/ai-makes-mark-in-drug-discovery-in-india-start-up-peptris-raises-1-mn-123122000671_1.html

- Gujral G, Shivarama J, Muthiah M. Artificial intelligence and data science for developing intelligent health informatics systems. National Conference on Artificial Intelligence in Health Informatics and Virtual Reality; 2020 Jan; Mumbai. 2020 [cited 2024 Oct 15]. Available from: https://www.researchgate.net/publication/338375465

- World Health Organization. Kicking off the journey of artificial intelligence in traditional medicine. World Health Organization. 2024 Sept 13 [cited 2024 Oct 15]. Available from: https://www.who.int/news/item/13-09-2024-kicking-off-the-journey-of-artificial-intelligence-in-traditional-medicine

- Marzban S, Najafi M, Agolli A, Ashrafi E. Impact of patient engagement on healthcare quality: A scoping review. J Patient Exp. 2022 Sep 16;9:23743735221125439. https://doi.org/10.1177/23743735221125439

- Batra P, Dave DM. Revolutionizing healthcare platforms: the impact of AI on patient engagement and treatment efficacy. IJSR. 2024 Feb 5;13(2):273–80. https://doi.org/10.21275/sr24201070211